Listening with Lasers: Hybrid Technique Sees Into Human Body

A human skull, on average, is about 6.8 millimeters (0.3 inches) thick, or roughly the depth of the latest smartphone. Human skin, on the other hand, is about 2 to 3 millimeters (0.1 inches) deep, or about the height of three stacked grains of salt. Although both layers are thin, they present major hurdles for any kind of imaging with laser light.

Why? The photons in laser light scatter when they encounter biological tissue. Corralling tiny photons to obtain meaningful details about tissue has proven to be one of the most challenging problems laser researchers have faced to date. However, researchers at Washington University in St. Louis (WUSTL) decided to eliminate the photon roundup completely and use scattering to their advantage. The result: an imaging technique that can peer right into a skull, penetrating tissue at depths up to 7 centimeters (about 2.8 inches).

The photoacoustic effect

The approach, which combines laser light and ultrasound, is based on the photoacoustic effect, a phenomenon first observed by Alexander Graham Bell in the 1880s. Bell found that rapidly interrupting a focused light beam produces sound.

To produce the photoacoustic effect, Bell focused a beam of light on a selenium block. He then rapidly interrupted the beam with a rotating slotted disk. He discovered that this activity produced sound waves. Bell showed that the photoacoustic effect depended on the absorption of light by the block, and the strength of the acoustic signal depended on how much light the material absorbed.

“We combine some very old physics with a modern imaging concept,” said WUSTL researcher Lihong Wang, who pioneered the approach. Wang and his WUSTL colleagues were the first to describe functional photoacoustic tomography (PAT) and 3D photoacoustic microscopy (PAM).

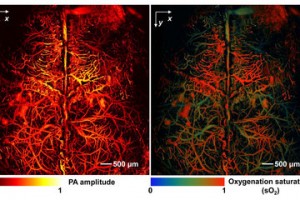

The two techniques follow the same basic principles: When the researchers shine a pulsed laser beam into biological tissue, the beam spreads out, and the tissue that absorbs the light undergoes a small but rapid rise in temperature. The heated tissue expands slightly, launching sound waves that are detected by conventional ultrasound transducers. Image reconstruction software converts the sound waves into high-resolution images.
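To give a feel for how small that temperature rise can be, the following Python sketch estimates the heating and the initial pressure produced by a single laser pulse. It is only an illustration: the relation used (initial pressure equals the Grüneisen parameter times the absorbed energy density) is a standard textbook approximation, and every number below (absorption coefficient, fluence, heat capacity, Grüneisen parameter) is an assumed, order-of-magnitude value for soft tissue rather than a figure reported by Wang's group.

```python
# Illustrative estimate of heating and initial pressure from one laser pulse.
# All numeric values are assumed, order-of-magnitude figures for soft tissue.

def absorbed_energy_density(mu_a_per_cm, fluence_j_per_cm2):
    """Energy absorbed per unit volume, in J/cm^3."""
    return mu_a_per_cm * fluence_j_per_cm2

def temperature_rise_kelvin(energy_j_per_cm3,
                            density_g_per_cm3=1.0,
                            heat_capacity_j_per_g_k=4.0):
    """Temperature rise if the absorbed energy is converted to heat in place."""
    return energy_j_per_cm3 / (density_g_per_cm3 * heat_capacity_j_per_g_k)

def initial_pressure_pa(energy_j_per_cm3, grueneisen=0.2):
    """Initial pressure rise; 1 J/cm^3 corresponds to 1e6 Pa."""
    return grueneisen * energy_j_per_cm3 * 1e6

# Assumed inputs: absorption 0.1 per cm, fluence 10 mJ per cm^2.
energy = absorbed_energy_density(mu_a_per_cm=0.1, fluence_j_per_cm2=0.010)
delta_t = temperature_rise_kelvin(energy)
p0 = initial_pressure_pa(energy)

print(f"temperature rise: {delta_t * 1e3:.2f} mK")
print(f"initial pressure: {p0:.0f} Pa")
```

Under these assumed inputs, the estimated heating is about a quarter of a millikelvin and the pressure rise is about 200 pascals.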

Following a tortuous path

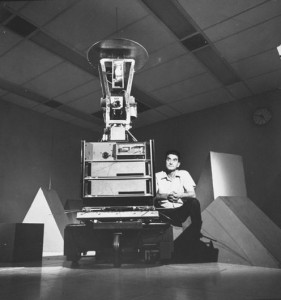

Wang began exploring the combination of sound and light as a postdoctoral researcher. At the time, he developed computer models of photons as they traveled through biological material. This work led to an NSF Faculty Early Career Development (CAREER) grant to study ultrasound encoding of laser light to “trick” information out of the laser beam.

Unlike other optical imaging techniques, photoacoustic imaging detects ultrasonic waves induced by absorbed photons, no matter how many times the photons have scattered. Multiple external detectors capture the sound waves regardless of where in the tissue the waves originate. “While the light travels on a highly tortuous path, the ultrasonic wave propagates in a clean and well-defined fashion,” said Wang. “We see optical absorption contrast by listening to the object.”
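Because sound travels through tissue along predictable paths, the arrival times at several detectors are enough to work out where a sound wave started. The Python sketch below illustrates that idea with a simplified delay-and-sum style back-projection in two dimensions. It is not the reconstruction software used by Wang's group: the ring of 16 detectors, the speed of sound, the source position, and the Gaussian pulse model are all hypothetical choices made for illustration.

```python
import math

SPEED_OF_SOUND = 1.5  # mm per microsecond; assumed value for soft tissue

def ring_detectors(count, radius_mm):
    """Place detectors evenly on a circle around the origin (hypothetical layout)."""
    return [(radius_mm * math.cos(2 * math.pi * k / count),
             radius_mm * math.sin(2 * math.pi * k / count))
            for k in range(count)]

def arrival_times(source, detectors):
    """Time of flight (microseconds) from a point absorber to each detector."""
    return [math.dist(source, d) / SPEED_OF_SOUND for d in detectors]

def delay_and_sum(times, detectors, grid_mm, pulse_width_us=0.2):
    """Score each grid point by how well its predicted delays match the
    recorded arrival times, and return the best-scoring point."""
    best_point, best_score = None, -1.0
    for x in grid_mm:
        for y in grid_mm:
            score = 0.0
            for t, d in zip(times, detectors):
                predicted = math.dist((x, y), d) / SPEED_OF_SOUND
                # Each detector's signal is modeled as a Gaussian pulse at time t.
                score += math.exp(-((t - predicted) / pulse_width_us) ** 2)
            if score > best_score:
                best_point, best_score = (x, y), score
    return best_point

detectors = ring_detectors(count=16, radius_mm=25.0)
true_source = (4.0, -7.0)                 # hypothetical absorber position, in mm
times = arrival_times(true_source, detectors)
grid = [i * 0.5 for i in range(-40, 41)]  # -20 mm to 20 mm in 0.5 mm steps
estimate = delay_and_sum(times, detectors, grid)
print(estimate)
```

The sketch uses only arrival times from a single point absorber; a real system records full waveforms from many absorbers at once and must also contend with noise.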

Because the approach does not require injecting imaging agents, researchers can study biological material in its natural environment. Using photoacoustic imaging, researchers can visualize a range of biological material, from cells and their component parts to tissue and organs. Scientists can even detect single red blood cells in blood, or fat and protein deposits in arteries.

While PAT and PAM are primarily used in laboratory settings, Wang and others are working on multiple clinical applications. In one example, researchers use PAM to study the trajectory of blood cells as they flow through vessels in the brain.

“By seeing individual blood cells, researchers can start to identify what’s happening to the cells as they move through the vessels. Watching how these cells move could act as an early warning system to allow detection of potential blockage sites,” said Richard Conroy, director of the Division of Applied Science and Technology at the U.S. National Institute of Biomedical Imaging and Bioengineering.

Minding the gap

Because PAT and PAM images can be correlated with those generated using other techniques, such as magnetic resonance imaging (MRI) or positron emission tomography (PET), these techniques are complementary. “One imaging modality can’t do everything,” said Conroy. “Comparing results from different modalities provides a more detailed understanding of what is happening from the cell level to the whole animal.”

The approach could help bridge the gap between animal and human research, especially in neuroscience.

“Photoacoustic imaging is helping us understand how the mouse brain works,” said Wang. “We can then apply this information to better understand how the human brain works.” Wang, along with his team, is applying both PAT and PAM to study mouse brain function.

One of the challenges currently facing neuroscientists is the lack of available tools to study brain activity, Wang said. “The holy grail of brain research is to image action potentials,” he said. (An action potential occurs when electrical signals travel along axons, the long fibers that carry signals away from the nerve cell body.) With funding from the U.S. BRAIN Initiative, Wang and his group are now developing a PAT system to capture images every one-thousandth of a second, fast enough to image action potentials in the brain.

“Photoacoustic imaging fills a gap between light microscopy and ultrasound,” said Conroy. “The game-changing aspect of this [Wang’s] approach is that it has redefined our understanding of how deep we can see with light-based imaging.”

References: http://www.livescience.com/