Fifty years of Shakey, the “world’s first electronic person”

Timelapse image of Shakey in action

Robots are increasingly becoming part of our everyday lives, and many roboticists believe that we are on the verge of a robot revolution that will do for goods and services what the Internet did for information. If so, then a lot of the credit goes to a 50-year-old box on wheels called Shakey: the “world’s first electronic person.”

In the mid-1960s, computers were undergoing the first big jump in their evolution since “electronic brains” became practical in the 1940s. Computer architecture was much more sophisticated, scientists and engineers had a better grasp of the technology, transistors were shrinking mainframes so they filled a room instead of a really big room, and some researchers were convinced that computers and robots would soon be able to enter real world settings.

Among these was Charlie Rosen, one of the pioneers in the field of artificial intelligence and founder of SRI International’s Artificial Intelligence Center (then the Stanford Research Institute). He believed that computer simulations had reached the stage where they made it possible to produce problem-solving robots capable of working in factories.

At the same time, ARPA, the ancestor of today’s DARPA, was interested in finding ways to use robots and artificial intelligence for military reconnaissance. One idea was that it might be possible to build some sort of robotic scout for the Army, so the agency, along with the National Science Foundation and the Office of Naval Research, hired SRI to look into such a scout.

The result was Shakey.

Officially, the timeline for project Shakey ran from 1966 to 1972, but like all complicated endeavors, the origins go back a bit further as the SRI team, consisting of project manager Rosen, Peter Hart, Marty Tenenbaum, Nils Nilsson, and others, gathered and drew up outlines and proposals for the project. Hart recently gave a keynote address at the ICRA conference in Seattle calling this year the 50th anniversary – and he was there.

Dates aside, what was remarkable about the Shakey project wasn’t just what it accomplished, but also what its ambitions showed about the state of robotics. What Rosen et al. set out to do indicated that they were either decades ahead of their time or in way over their heads.

The purpose of Shakey wasn’t just to produce a robot, but to produce one that was mobile, autonomous, equipped with vision, capable of mapping its surroundings, able to solve problems, and able to be addressed in natural-language English. Even today, combining only a fraction of these would be ambitious. To combine them all using 1966 technology would have given Dr Frankenstein pause, but Shakey went on to become the first robot to combine motion, perception, and problem solving in one mobile package.

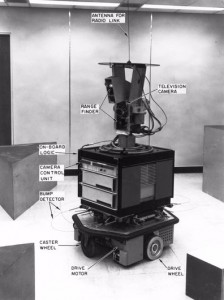

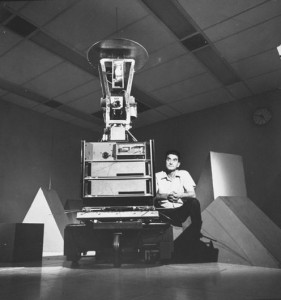

But why was it called Shakey? The answer was in its construction. Looking like a very early draft of R2D2, Shakey was a stack of gear. On the bottom was a motorized platform containing the drive wheels, push bar, and cat’s whiskers bump sensors. Above this was the electronic components box, then the “head” consisting of a black and white television camera, microphone, and a spinning prism rangefinder with the radio antenna on the very top. This arrangement was practical, but not very stable, hence the name.

According to Rosen: “We worked for a month trying to find a good name for it, ranging from Greek names to whatnot, and then one of us said, ‘Hey, it shakes like hell and moves around, let’s just call it Shakey.’”

Even standing about five feet tall, Shakey didn’t seem very large until someone informed you that he (the team always called it “he”) actually weighed several tons. The visible part was just his mobile extension. The actual brain filled an entire room in another part of the lab with a second computer acting as a control interface. Originally, the mainframe was a 64K SDS-940 computer programmed in Fortran and Lisp, which was later replaced with a 192K PDP-10 around 1969. To put that into perspective, there are handheld games today with much more power than both of these put together.

Shakey lived in his own little world, which was made up of a series of rooms, doors, and objects. The walls had baseboards and everything was painted white and red to provide contrast for the monochromatic vision system. The robot’s job was to navigate these rooms, plan how to carry out tasks, and solve any problems that might arise.

Early versions of Shakey envisioned manipulators, but this was later deemed unnecessarily complicated, so the final design worked by pushing objects around.

Much like other computers of the day, Shakey was given commands by teletype and responded via teletype and cathode ray tube. The robot was controlled by a tiered series of actions, which allowed Shakey to assess situations and find answers to problems.

At the bottom were low-level action programs, which were given to Shakey in plain English with commands like “go,” “pan,” and “tilt.” These were automatically translated into predicate calculus and provided the building blocks for intermediate-level actions, such as “go to,” which told the robot to go to a particular location.

Telling Shakey where to go may seem simple, but if the location was in another room or behind an obstacle, that required him to set up subgoals or way points with a route to reach them. It was even more complicated if Shakey was told to find an object and move it somewhere else.
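To give a feel for what breaking a destination into way points involves, here is a minimal sketch in Python. The floor plan, the room names, and the `plan_route` function are all invented for illustration, and the search is a plain breadth-first search rather than the method the SRI team actually used.

```python
from collections import deque

# Hypothetical floor plan: each room maps to the rooms reachable through a door.
DOORS = {
    "room_a": ["hall"],
    "room_b": ["hall"],
    "room_c": ["hall"],
    "hall": ["room_a", "room_b", "room_c"],
}


def plan_route(doors, start, goal):
    """Return the rooms to pass through from start to goal, or None if unreachable."""
    frontier = deque([[start]])  # each entry is a partial route
    visited = {start}
    while frontier:
        path = frontier.popleft()
        if path[-1] == goal:
            return path
        for neighbor in doors.get(path[-1], []):
            if neighbor not in visited:
                visited.add(neighbor)
                frontier.append(path + [neighbor])
    return None


# Every room after the first is a way point the robot must reach in turn.
route = plan_route(DOORS, "room_a", "room_c")
print(route)
```

Each intermediate room in the returned route acts as a subgoal: the robot only has to work out how to reach the next doorway, not the whole journey at once.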

Shakey navigated by dead reckoning. That is, he counted turns of the drive wheels and deduced his location from them. However, errors in this estimate accumulated over time, so Shakey had to back it up with visual information. His pattern recognition algorithms could pick out things like object outlines or room corners, allowing him to build up a map of his world and adjust his navigation.
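The arithmetic behind dead reckoning on a two-wheeled platform can be sketched in a few lines of Python. This is a textbook-style approximation, not Shakey’s actual code; the function name `dead_reckon` and the wheel circumference and track width values are assumptions chosen only for the example.

```python
import math


def dead_reckon(pose, left_turns, right_turns, wheel_circumference, track_width):
    """Update an (x, y, heading) pose from wheel revolutions since the last update.

    Distances are in meters and heading is in radians. All parameters are
    illustrative assumptions, not measurements of the real robot.
    """
    x, y, heading = pose
    left = left_turns * wheel_circumference    # distance rolled by the left wheel
    right = right_turns * wheel_circumference  # distance rolled by the right wheel
    distance = (left + right) / 2.0            # distance moved by the robot's center
    turn = (right - left) / track_width        # change in heading
    # Approximate the motion as a straight segment along the mid-turn heading.
    mid = heading + turn / 2.0
    return (
        x + distance * math.cos(mid),
        y + distance * math.sin(mid),
        heading + turn,
    )


# Two full turns of both wheels moves the robot straight ahead by one meter.
pose = dead_reckon((0.0, 0.0, 0.0), 2, 2, wheel_circumference=0.5, track_width=0.6)
print(pose)
```

Because every update is added to the previous estimate, small mistakes never cancel out. A 1 percent error in the assumed wheel circumference becomes a half-meter error after 50 meters of travel, which is why a visual check against known landmarks is needed.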

The key to this was STRIPS (Stanford Research Institute Problem Solver), an artificial intelligence language for automated planning that allowed Shakey to map actions and subgoals into a plan of action. This also gave him the ability to recover from surprises, such as a misplaced box, an unknown room, an unexpected obstacle, or an obstructed door, by planning moves to get around them. He could also learn from past mistakes by combining commands, intermediate actions, and preconditions to produce new actions.
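The flavor of this kind of planning can be shown with a small Python sketch. It describes each action by the facts that must hold beforehand (preconditions), the facts it makes true, and the facts it makes false, which is the representation STRIPS is known for. Everything else here is an assumption for illustration: the two-room world, the operator names, and the simple forward breadth-first search, which stands in for the original system’s own search strategy.

```python
from collections import deque
from typing import NamedTuple


class Operator(NamedTuple):
    name: str
    preconditions: frozenset  # facts that must hold before the action
    add_list: frozenset       # facts the action makes true
    delete_list: frozenset    # facts the action makes false


def make(name, pre, add, delete):
    return Operator(name, frozenset(pre), frozenset(add), frozenset(delete))


# Hypothetical two-room world with one box.
OPERATORS = [
    make("go(room_a, room_b)", {"robot_in(room_a)"},
         {"robot_in(room_b)"}, {"robot_in(room_a)"}),
    make("go(room_b, room_a)", {"robot_in(room_b)"},
         {"robot_in(room_a)"}, {"robot_in(room_b)"}),
    make("push(box, room_b, room_a)", {"robot_in(room_b)", "box_in(room_b)"},
         {"robot_in(room_a)", "box_in(room_a)"},
         {"robot_in(room_b)", "box_in(room_b)"}),
]


def plan(initial, goal, operators):
    """Search forward from the initial state until every goal fact holds."""
    start, goal = frozenset(initial), frozenset(goal)
    frontier = deque([(start, [])])
    seen = {start}
    while frontier:
        state, steps = frontier.popleft()
        if goal <= state:
            return steps
        for op in operators:
            if op.preconditions <= state:  # the action is applicable
                nxt = (state - op.delete_list) | op.add_list
                if nxt not in seen:
                    seen.add(nxt)
                    frontier.append((nxt, steps + [op.name]))
    return None


steps = plan({"robot_in(room_a)", "box_in(room_b)"}, {"box_in(room_a)"}, OPERATORS)
print(steps)
```

Asked to get the box into room A, the sketch works out that the robot must first go to room B and then push the box back. If the world turns out to differ from the plan, for example because the box has been moved, the same function can be called again from the newly observed state.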

Eventually, Shakey was able to solve surprisingly complex puzzles, such as the monkey and banana problem. In this, Shakey had to move a box onto a platform, which meant he had to figure out how to move a ramp into position first. It took the robot days to accomplish, with many false starts and repetitions, but at the time it was an unprecedented example of robotics and artificial intelligence.

The reaction to Shakey by the public and even by scientists was one of sensation. Shakey was described in Life magazine in 1970 as “the first electronic person,” and thinkers like Marvin Minsky were seriously predicting true artificial intelligence of superhuman power within three to eight years on the strength of Shakey’s performance.

If nothing else, it’s a cautionary tale about overestimating the state of the art. Bear in mind that this was a time when it was commonly believed that an invincible chess-playing computer would be built any day now instead of decades into the future – contemporary chess computers were lucky if they could manage a legal game, much less win one.

The Shakey project carried on until 1972, when the Defense Department started to get impatient for results. One general was even reported to have asked: “Can you mount a 36-inch bayonet on it?” Unfortunately, despite remarkable advances, Shakey was still a creature of the laboratory, and funding dried up.

Today, Shakey is on display at the Computer History Museum in Mountain View, California. However, it’s fair to say that without Shakey, today’s robots wouldn’t have been possible.

“Shakey, the first mobile robot to reason about its actions, was groundbreaking not only to robotics, but to artificial intelligence as well, as it led to fundamental advances in visual analysis, route finding, and planning of complex actions,” says Ray Perrault, PhD, director of SRI International’s Artificial Intelligence Center. “Shakey also helped open the possibilities of computer science to the public’s imagination, and put SRI’s Artificial Intelligence Center on the map.”

More information about Shakey is available at SRI International and a video showing the robot in action can be found here.

References: http://www.gizmag.com/