Unseen areas are troublesome for police and first responders: Rooms can harbor dangerous gunmen, while collapsed buildings can conceal survivors. Now Bounce Imaging, founded by an MIT alumnus, is giving officers and rescuers a safe glimpse into the unknown.

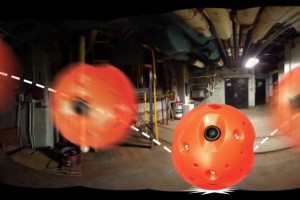

In July, the Boston-based startup will release its first line of tactical spheres, equipped with cameras and sensors, that can be tossed into potentially hazardous areas to instantly transmit panoramic images of those areas back to a smartphone.

“It basically gives a quick assessment of a dangerous situation,” says Bounce Imaging CEO Francisco Aguilar MBA ’12, who invented the device, called the Explorer.

Launched in 2012 with help from the MIT Venture Mentoring Service (VMS), Bounce Imaging will deploy 100 Explorers to police departments nationwide, with the aim of branching out to first responders and other clients in the near future.

The softball-sized Explorer is covered in a thick rubber shell. Inside are LED lights and a camera with six lenses, which peek out at different indented spots around the circumference. When activated, the camera snaps photos from all lenses a few times every second. Software uploads these disparate images to a mobile device and rapidly stitches them together into full panoramic images. There are plans to add sensors for radiation, temperature, and carbon monoxide in future models.
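
The article does not describe how the stitching software works internally. As a rough illustration only, the sketch below assumes the six lenses point outward like the faces of a cube (a hypothetical layout, with made-up lens names) and shows one small step any panoramic stitcher needs: deciding which lens most directly faces each viewing direction in the final panorama.

```python
import math

# Hypothetical layout: one lens per axis direction, like the faces of a cube.
# The real Explorer geometry is not given in the article.
LENSES = {
    "front": (1.0, 0.0, 0.0),
    "back": (-1.0, 0.0, 0.0),
    "left": (0.0, 1.0, 0.0),
    "right": (0.0, -1.0, 0.0),
    "up": (0.0, 0.0, 1.0),
    "down": (0.0, 0.0, -1.0),
}


def direction(yaw_deg, pitch_deg):
    """Unit vector for a viewing direction (yaw turns toward 'left', pitch tilts 'up')."""
    yaw = math.radians(yaw_deg)
    pitch = math.radians(pitch_deg)
    return (
        math.cos(pitch) * math.cos(yaw),
        math.cos(pitch) * math.sin(yaw),
        math.sin(pitch),
    )


def lens_for_direction(yaw_deg, pitch_deg):
    """Name of the lens whose axis is closest to the given direction."""
    d = direction(yaw_deg, pitch_deg)
    # The largest dot product means the smallest angle between lens axis and direction.
    return max(LENSES, key=lambda name: sum(a * b for a, b in zip(LENSES[name], d)))


def panorama_lens_map(width, height):
    """For each cell of a width x height panorama grid, pick the lens to sample from."""
    rows = []
    for r in range(height):
        pitch = 90.0 - (r + 0.5) * 180.0 / height  # top row looks up, bottom row down
        row = []
        for c in range(width):
            yaw = -180.0 + (c + 0.5) * 360.0 / width  # sweep all the way around
            row.append(lens_for_direction(yaw, pitch))
        rows.append(row)
    return rows
```

A real stitcher would also have to correct lens distortion and blend the overlapping edges between neighboring images; this sketch covers only the lookup step.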

For this first manufacturing run, the startup aims to gather feedback from police, who operate in what Aguilar calls a “reputation-heavy market.” “You want to make sure you deliver well for your first customer, so they recommend you to others,” he says.

Steered right through VMS

Over the years, the Explorer has earned praise from media outlets including Wired, the BBC, NBC, Popular Science, and Time, which named the device one of the best inventions of 2012. Bounce Imaging also earned top prizes at the 2012 MassChallenge Competition and the 2013 MIT IDEAS Global Challenge.

Instrumental in Bounce Imaging’s early development, however, was the VMS, which Aguilar turned to shortly after forming Bounce Imaging at the MIT Sloan School of Management. Classmate and U.S. Army veteran David Young MBA ’12 joined the project early to provide an end user’s perspective.

“The VMS steered us right in many ways,” Aguilar says. “When you don’t know what you’re doing, it’s good to have other people who are guiding you and counseling you.”

Leading Bounce Imaging’s advisory team was Jeffrey Bernstein SM ’84, a computer scientist who had co-founded a few tech startups—including PictureTel, directly out of graduate school, with the late MIT professor David Staelin—before coming to VMS as a mentor in 2007.

Among other things, Bernstein says, the VMS mentors spent roughly two years helping Bounce Imaging navigate funding and partnering strategies, recruit a core team of engineers, and establish its first market, rather than focusing on technical challenges. “The particulars of the technology are usually not the primary areas of focus in VMS,” Bernstein says. “You need to understand the market, and you need good people.”

In that way, Bernstein adds, Bounce Imaging already had a leg up. “Unlike many ventures I’ve seen, the Bounce Imaging team came in with a very clear idea of what need they were addressing and why this was important for real people,” he says.

Bounce Imaging still reaches out to its VMS mentors for advice. Another “powerful resource for alumni companies,” Aguilar says, was a VMS list of previously mentored startups. Over the years, Aguilar has pinged that list for a range of advice, including on manufacturing and funding issues. “It’s such a powerful list, because MIT alumni companies are amazingly generous to each other,” Aguilar says.

The right first market

From a mentor’s perspective, Bernstein sees Bounce Imaging’s current commercial success as a result of “finding that right first market,” which helped it overcome early technical challenges. “They got a lot of really good customer feedback really early and formed a real understanding of the market, allowing them to develop a product without a lot of uncertainty,” he says.

Aguilar conceived of the Explorer after the 2010 Haiti earthquake, as a student at both MIT Sloan and the Kennedy School of Government at Harvard University. International search-and-rescue teams, he learned, could not easily find survivors trapped in the rubble, as they were using cumbersome fiber-optic cameras, which were difficult to maneuver and too expensive for wide use. “I started looking into low-cost, very simple technologies to pair with your smartphone, so you wouldn’t need special training or equipment to look into these dangerous areas,” Aguilar says.

The Explorer was initially developed for first responders. But after being swept up in a flurry of national and international attention from winning the $50,000 grand prize at the 2012 MassChallenge, Bounce Imaging started fielding numerous requests from police departments—which became its target market.

Months of rigorous testing with departments across New England led Bounce Imaging from a clunky prototype of the Explorer, “a Medusa of cables and wires in a 3D-printed shell that was nowhere near throwable,” Aguilar says, through about 20 further iterations.

Along the way, the team also learned key lessons about what police needed. Among the most important, Aguilar says, is that police are under so much pressure in potentially dangerous situations that they need something very easy to use. “We had loaded the system up with all sorts of options and buttons and nifty things—but really, they just wanted a picture,” Aguilar says.

Neat tricks

Today’s Explorer is designed with a few “neat tricks,” Aguilar says. First is a custom, six-lensed camera that pulls raw images from its lenses simultaneously into one processor. This reduces complexity and costs less than using six separate cameras.

The ball also serves as its own wireless hotspot, through Bounce Imaging’s network, which a mobile device uses to quickly grab those images, “because a burning building probably isn’t going to have Wi-Fi, but we still want … to work with a first responder’s existing smartphone,” Aguilar says.

But the key innovation, Aguilar says, is the image-stitching software, developed by engineers at the Costa Rican Institute of Technology. The software’s algorithms, Aguilar says, vastly reduce computational load and work around noise and other image-quality problems. Because of this, it can stitch multiple images in a fraction of a second, compared with about one minute through other methods.

In fact, after the Explorer’s release, Aguilar says Bounce Imaging may option its image-stitching technology for drones, video games, movies, or smartphone technologies. “Our main focus is making sure the [Explorer] works well in the market,” Aguilar says. “And then we’re trying to see what exciting things we can do with the imaging processing, which could vastly reduce computational requirements for a range of industries developing around immersive video.”
