World’s Thinnest Light Bulb Created from Graphene

Graphene, a form of carbon famous for being stronger than steel and more conductive than copper, can add another wonder to the list: making light.

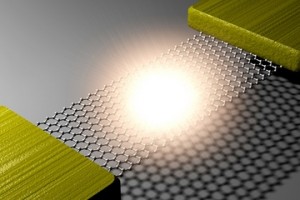

Researchers have developed a light-emitting graphene transistor that works in the same way as the filament in a light bulb.

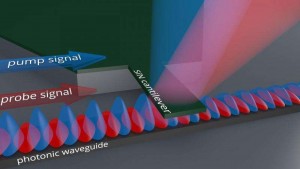

“We’ve created what is essentially the world’s thinnest light bulb,” study co-author James Hone, a mechanical engineer at Columbia University in New York, said in a statement.

Scientists have long wanted to create a teensy “light bulb” to place on a chip, enabling what are called photonic circuits, which run on light rather than electric current. The problem has been one of size and temperature: incandescent filaments must get extremely hot before they can produce visible light. This new graphene device, however, is so efficient and tiny that the resulting technology could offer new ways to make displays or study high-temperature phenomena at small scales, the researchers said.

Making light

When electric current is passed through an incandescent light bulb’s filament (usually made of tungsten), the filament heats up and glows. Electrons moving through the material knock against electrons in the filament’s atoms, giving them energy. Those electrons then return to their former energy levels and emit photons (light) in the process. Crank up the current and voltage enough and the filament in an incandescent bulb reaches temperatures of about 5,400 degrees Fahrenheit (3,000 degrees Celsius). This is one reason light bulbs either have no air in them or are filled with an inert gas like argon: At those temperatures tungsten would react with the oxygen in air and simply burn.

In the new study, the scientists used strips of graphene a few microns across and from 6.5 to 14 microns in length, each spanning a trench of silicon like a bridge. (A micron is one-millionth of a meter; a human hair is about 90 microns thick.) Electrodes were attached to the ends of each graphene strip. Just as with tungsten, running a current through graphene makes the material light up. But there is an added twist: graphene conducts heat less efficiently as its temperature increases, which means the heat stays in a spot at the center of the strip, rather than being relatively evenly distributed as in a tungsten filament.

Myung-Ho Bae, one of the study’s authors, told Live Science that trapping the heat in one region makes the lighting more efficient. “The temperature of hot electrons at the center of the graphene is about 3,000 K [4,940 F], while the graphene lattice temperature is still about 2,000 K [3,140 F],” he said. “It results in a hotspot at the center and the light emission region is focused at the center of the graphene, which also makes for better efficiency.” It’s also the reason the electrodes at either end of the graphene don’t melt.
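The bracketed Fahrenheit figures in Bae’s quote, and the Celsius-to-Fahrenheit figure given earlier for a tungsten filament, can be checked with the standard temperature conversion formulas. The short Python sketch below does only that arithmetic; the function names are illustrative and do not come from the study.

```python
def kelvin_to_fahrenheit(kelvin: float) -> float:
    """Convert a temperature in kelvins to degrees Fahrenheit."""
    celsius = kelvin - 273.15
    return celsius * 9.0 / 5.0 + 32.0


def celsius_to_fahrenheit(celsius: float) -> float:
    """Convert a temperature in degrees Celsius to degrees Fahrenheit."""
    return celsius * 9.0 / 5.0 + 32.0


if __name__ == "__main__":
    # Temperatures quoted in the article.
    print(f"Hot electrons, 3,000 K: {kelvin_to_fahrenheit(3000):.0f} F")
    print(f"Graphene lattice, 2,000 K: {kelvin_to_fahrenheit(2000):.0f} F")
    print(f"Tungsten filament, 3,000 C: {celsius_to_fahrenheit(3000):.0f} F")
```

The two kelvin values land within a degree of the figures in the quote, and 3,000 degrees Celsius comes out slightly above the rounded “about 5,400” given for tungsten.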

As for why this is the first time light has been made from graphene, study co-leader Yun Daniel Park, a professor of physics at Seoul National University, noted that graphene is usually embedded in or in contact with a substrate.

“Physically suspending graphene essentially eliminates pathways in which heat can escape,” Park said. “If the graphene is on a substrate, much of the heat will be dissipated to the substrate. Before us, other groups had only reported inefficient radiation emission in the infrared from graphene.”

The light emitted from the graphene also reflected off the silicon behind each suspended strip. The reflected light interferes with the directly emitted light, producing an emission pattern with peaks at particular wavelengths. That opened up another possibility: tuning the light by varying the distance between the graphene and the silicon.
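The tuning idea can be illustrated with a textbook two-beam interference model. This is a rough sketch under stated assumptions, not the researchers’ own analysis: light travels straight up and down, it reflects off the silicon only once, the silicon’s amplitude reflection coefficient is taken to be about -0.6 (an assumed round value, negative because reflection from a denser medium flips the phase), and the gap distances are hypothetical.

```python
import math


def relative_intensity(wavelength_nm: float, gap_nm: float,
                       reflection_amplitude: float = -0.6) -> float:
    """Intensity of direct plus once-reflected light, relative to direct light alone.

    The reflected beam travels an extra 2 * gap_nm, so its phase lags by
    4 * pi * gap / wavelength. reflection_amplitude is an assumed value.
    """
    phase = 4.0 * math.pi * gap_nm / wavelength_nm
    r = reflection_amplitude
    # |1 + r * exp(i * phase)|^2 for a real-valued r
    return 1.0 + r * r + 2.0 * r * math.cos(phase)


def peak_wavelength(gap_nm: float, start_nm: int, stop_nm: int) -> int:
    """Return the integer wavelength in [start_nm, stop_nm] with the highest intensity."""
    return max(range(start_nm, stop_nm + 1),
               key=lambda w: relative_intensity(w, gap_nm))


if __name__ == "__main__":
    # Hypothetical gap distances, chosen only to show that the peak moves.
    for gap in (900, 1000):
        print(gap, "nm gap -> peak near", peak_wavelength(gap, 650, 850), "nm")
```

In this simplified picture, widening the gap shifts the brightest wavelength toward the red, which is the kind of tuning the paragraph above describes.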

The principle behind the graphene light source is simple, Park said, but it took a long time to discover.

“It took us nearly five years to figure out the exact mechanism but everything (all the physics) fit. And, the project has turned out to be some kind of a Columbus’ Egg,” he said, referring to a legend in which Christopher Columbus challenged a group of men to make an egg stand on its end; they all failed and Columbus solved the problem by just cracking the shell at one end so that it had a flat bottom.

References: http://www.livescience.com/