Brillo as an underlying operating system for the Internet of Things

The Project Brillo announcement was one of the highlights of Google’s I/O conference last week. Brillo is, fundamentally, Google’s answer to the need for an Internet of Things operating system: it is designed to run on, and connect, a wide range of low-power IoT devices. If Android was Google’s answer for mobile, Brillo is a mini, or lightweight, Android OS. Part of The Register’s headline on the announcement was “Google puts Android on a diet”.

Brillo was developed to connect IoT objects from “washing machine to a rubbish bin and linking in with existing Google technologies,” according to The Guardian.

As The Guardian also pointed out, this is not just about the kitchen, where the fridge tells your phone that it is low on milk. The Brillo vision extends beyond home systems to farms and to city systems, where a rubbish bin could tell the council when it is full and needs collecting. “Bins, toasters, roads and lights will be able to talk to each other for automatic, more efficient control and monitoring.”

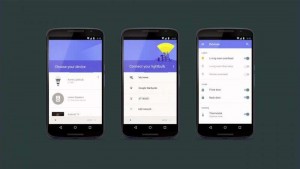

Brillo is derived from Android. Peter Bright, technology editor at Ars Technica, commented: “Brillo is smaller and slimmer than Android, providing a kernel, hardware abstraction, connectivity, and security infrastructure.” The Next Web similarly described Brillo as “a stripped down version of Android that can run on minimal system requirements.” Brillo debuted alongside another key component, Weave. Weave is the communications layer, allowing the cloud, mobile devices, and Brillo devices to talk to one another. AnandTech described Weave as “an API framework meant to standardize communications between all these devices.”

Weave is a cross-platform common language. Writing in AnandTech, Andrei Frumusanu said that, judging from the code snippets shown in the presentation, it appeared to use a straightforward, simple and descriptive syntax in JSON format. Google’s developers described Weave as “the IoT protocol for everything” and Brillo as “based on the lower levels of Android.”

Are Brillo and its Weave component, then, the answer to the interoperability needs of developers, manufacturers and consumers of smart objects? Some observers welcomed the announcement as a sign that Google, in addition to its Nest business, would now be an active player in the IoT space. Google was making its presence known in the march toward a connected-device ecosystem.

Will this be the easiest platform for developers to build on? Will Brillo have the widest reach over the long term? Or will the IoT get tangled up in a “format war”? These were some of the questions raised in response to Google’s introduction of Project Brillo.

Derek du Preez offered his view on standards and the IoT in diginomica, saying “we have learnt from history that there is typically room for at least a couple of mainstream OS’. But if Google wants to be the leader in this market, it needs to be the platform of choice for some of the early IoT ‘killer apps’. Its investment in Nest goes a long way to making this happen.” He added that, given Google’s existing ecosystem and the number of people around the world who already own Android handsets, it had a good chance of taking on its rivals and winning out.

The project page on the Google Developers site speaks about wide developer choice: “Since Brillo is based on the lower levels of Android, you can choose from a wide range of hardware platforms and silicon vendors.”

The site also said, “The Weave program will drive interoperability and quality through a certification program that device makers must adhere to. As part of this program, Weave provides a core set of schemas that will enable apps and devices to seamlessly interact with each other.”

References: http://phys.org/